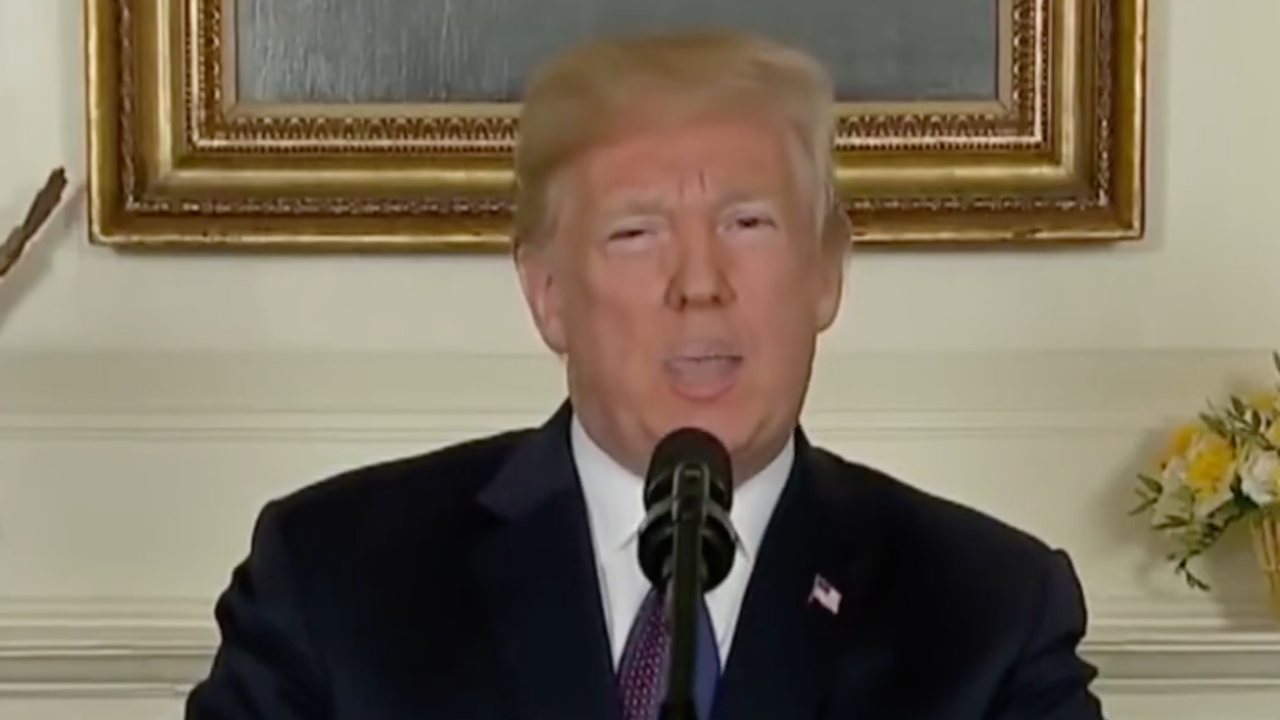

Disinformation and distrust online are set to take a turn for the worse. Rapid advances in deep-learning algorithms to synthesize video and audio content have made possible the production of “deep fakes”: highly realistic and difficult-to-detect depictions of real people doing or saying things they never said or did. As this technology spreads, the ability to produce bogus yet credible video and audio content will come within the reach of an ever-larger array of governments, nonstate actors, and individuals. As a result, the ability to advance lies using hyperrealistic, fake evidence is poised for a great leap forward.

The array of potential harms that deep fakes could entail is stunning. A well-timed and thoughtfully scripted deep fake or series of deep fakes could tip an election, spark violence in a city primed for civil unrest, bolster insurgent narratives about an enemy’s supposed atrocities, or exacerbate political divisions in a society. The opportunities for the sabotage of rivals are legion: for example, sinking a trade deal by slipping to a foreign leader a deep fake purporting to reveal the insulting true beliefs or intentions of U.S.

The prospect of a comprehensive technical solution is limited for the time being, as are the options for legal or regulatory responses to deep fakes. A combination of technical, legislative, and personal solutions could help stem the problem.

Background: What Makes Deep Fakes Different?

The creation of false video and audio content is not new. Those with resources, like Hollywood studios or government entities, have long been able to make reasonably convincing fakes. The “appearance” of 1970s-vintage Peter Cushing and Carrie Fisher in Rogue One: A Star Wars Story is a recent example. The looming era of deep fakes will be different, however, because the capacity to create hyperrealistic, difficult-to-debunk fake video and audio content will spread far and wide. Advances in machine learning are driving this change. Most notably, academic researchers have developed “generative adversarial networks” (GANs) that pit algorithms against one another to create synthetic data (i.e., the fake) that is nearly identical to its training data (i.e., real audio or video). Similar work is likely taking place in various classified settings, but the technology is developing at least partially in full public view with the involvement of commercial providers.

Some degree of credible fakery is already within the reach of leading intelligence agencies, but in the coming age of deep fakes, anyone will be able to play the game at a dangerously high level. In such an environment, it would take little sophistication and resources to produce havoc. Not long from now, robust tools of this kind and for-hire services to implement them will be cheaply available to anyone.

The information-sharing environment is well suited to the spread of falsehoods. In the United States and many other countries, society already grapples with surging misinformation resulting from the declining influence of quality-controlled mass media and the growing significance of social media as a comparatively unfiltered, many-to-many news source. (As of August 2017, two-thirds of Americans reported to Pew that they get their news at least in part from social media.) This is fertile ground for circulating deep fake content.

The arrival of deep fakes has frightening implications for foreign affairs and national security. They could be potent instruments of covert action campaigns and other forms of disinformation used in international relations and military operations, with potential for serious damage. The information operation against Qatar in 2017, which attributed pro-Iranian views to Qatar’s emir, illustrates how significant fraudulent content can be even without credible audio and video. For example, a credible deep fake audio file could emerge purporting to be a recording of President Donald J. Trump speaking privately with Russian President Vladimir Putin during their last meeting in Helsinki, with Trump promising Putin that the United States would not defend certain North Atlantic Treaty Organization (NATO) allies in the event of Russian subversion.
